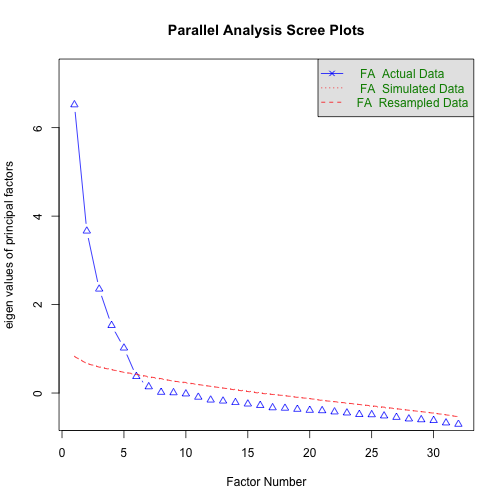

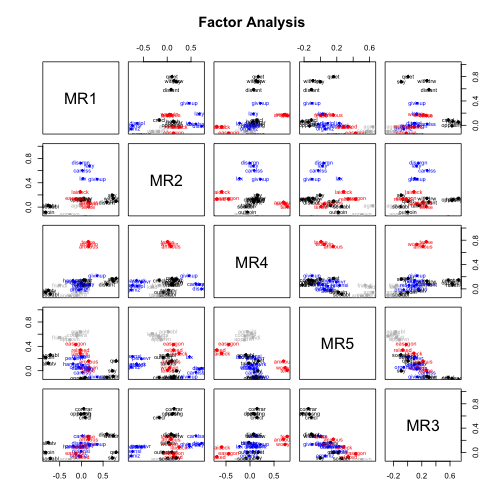

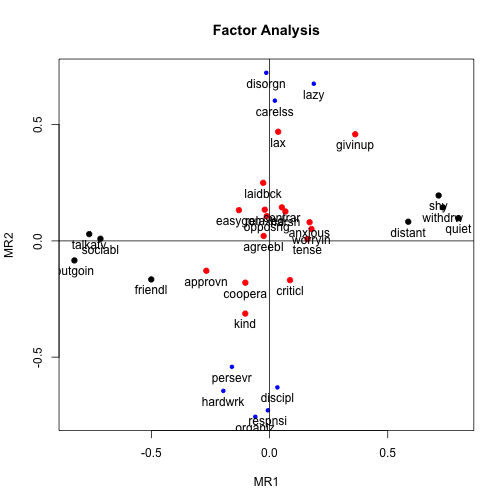

class: center, middle, inverse, title-slide .title[ # Lecture 7: PY 0794 - Advanced Quantitative Research Methods ] .author[ ### Dr. Thomas Pollet, Northumbria University (<a href="mailto:thomas.pollet@northumbria.ac.uk" class="email">thomas.pollet@northumbria.ac.uk</a>) ] .date[ ### 2025-02-17 | <a href="http://tvpollet.github.io/disclaimer">disclaimer</a> ] --- ## PY0794: Advanced Quantitative research methods. * Last lecture: Mediation * Today: Exploratory Factor analysis --- ## Goals (today) Factor analysis Some ways of visualising factor analysis. --- ## Assignment After today you should be able to complete the following sections for Assignment II: Exploratory Factor Analysis and its assumptions. --- ## Factor analysis Who has run an exploratory factor analysis? What was the purpose? <img src="https://media.giphy.com/media/l0HlHqERfyDLQD1p6/giphy.gif" width="300px" style="display: block; margin: auto;" /> --- ## Purpose. We want to study the covariation between a large number of observed variables. 1. How many latent factors would account for most of the variation among the observed variables? 2. Which variables appear to define each factor? What labels could we give to these factors? If the observed covariation can be explained by a small number of factors (e.g., 2-5), this would increase our understanding of the relationships among the variables! --> Reduce complexity and increase understanding. --> Validate a scale (--> ultimately confirmatory factor analysis). --- ## Terminology. _Exploratory_ vs. confirmatory. Occasionally you will have a very clear idea as to how many factors there should be. In such a case one would usually run a confirmatory factor analysis. <img src="https://media.giphy.com/media/Wt94luq6ZY5tS/giphy.gif" width="300px" style="display: block; margin: auto;" /> --- ## Terminology. Principal components vs. Factor analysis.
_"The idea of principal components analysis (PCA) is to find a small number of linear combinations of the variables so as to capture most of the variation in the dataframe as a whole. ... Principal components analysis finds a set of orthogonal standardized linear combinations which together explain all of the variation in the original data. There are as many principal components as there are variables, but typically it is only the first few of them that explain important amounts of the total variation."_ Crawley (2013:809-810) --- ## Terminology: Factor analysis _"With principal components analysis we were fundamentally interested in the variables and their contributions. Factor analysis aims to provide usable numerical values for quantities such as intelligence or social status that are not directly measurable. The idea is to use correlations between observable variables in terms of underlying ‘factors’."_ Crawley (2013:813) Note that 'factor' here means something fundamentally different from a factor in the sense of a single categorical variable (e.g., a factorial variable in an ANOVA design). Some researchers also use the terms principal component analysis and factor analysis interchangeably, even though they are separate techniques. --- ## Principal component analysis Today we will mostly deal with factor analysis (should you require principal component analysis, have a look [here](https://www.r-bloggers.com/principal-component-analysis-using-r/) and [here](http://www.rpubs.com/koushikstat/pca)). The mathematics are also described in those sources. --- ## Factor analysis assumptions. In essence, you can think of factor analysis as a set of OLS regressions of each observed variable on the latent factors, which means similar assumptions apply. **Measurement**: All variables should be interval. No dummy variables. No outliers. **Sample size**: >200, although some advocate 5-10 participants per variable; but see [this](http://people.musc.edu/~elg26/teaching/psstats1.2006/maccallumetal.pdf) on such rules of thumb.
**Multivariate normality**: Though not necessarily required for exploratory factor analysis, it is useful to check. **Linearity**: The proposed relationships are linear. **Factorability**: There should be enough sizeable correlations among the variables for them to be meaningfully grouped together. More on assumptions [here](https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3290828/pdf/fpsyg-03-00055.pdf) --- ## Data The data were originally from [here](https://quantdev.ssri.psu.edu/) but are now available [here](https://tvpollet.github.io/PY_0794/Lecture_7/personality0.txt). 240 participants provided self-ratings (1-9) on 32 variables. ``` r # Data downloaded into the working directory. f_data <- read.table("personality0.txt") require(stargazer) stargazer(f_data, type = "html", out= "factor_data.html") ``` --- ## Measurement and sample size Measurement and sample size are OK. (With a 1 to 9 scale one can always question how 'interval' the data really are; the commonly used 1 to 7 scales are more questionable still.) <img src="https://media.giphy.com/media/3oz8xQQP4ahKiyuxHy/giphy.gif" width="300px" style="display: block; margin: auto;" /> --- ## Multivariate normality. As an aside: this is not looking great. We will ignore it for now, given that we are conducting *exploratory* factor analysis.
``` r require(MVN) mvn(f_data) ``` ``` ## $multivariateNormality ## Test HZ p value MVN ## 1 Henze-Zirkler 1.000172 0 NO ## ## $univariateNormality ## Test Variable Statistic p value Normality ## 1 Anderson-Darling distant 6.9751 <0.001 NO ## 2 Anderson-Darling talkatv 4.2917 <0.001 NO ## 3 Anderson-Darling carelss 6.8577 <0.001 NO ## 4 Anderson-Darling hardwrk 8.0398 <0.001 NO ## 5 Anderson-Darling anxious 4.0905 <0.001 NO ## 6 Anderson-Darling agreebl 7.0108 <0.001 NO ## 7 Anderson-Darling tense 4.3169 <0.001 NO ## 8 Anderson-Darling kind 7.7714 <0.001 NO ## 9 Anderson-Darling opposng 5.2942 <0.001 NO ## 10 Anderson-Darling relaxed 3.5136 <0.001 NO ## 11 Anderson-Darling disorgn 5.1948 <0.001 NO ## 12 Anderson-Darling outgoin 4.8684 <0.001 NO ## 13 Anderson-Darling approvn 5.6728 <0.001 NO ## 14 Anderson-Darling shy 3.7198 <0.001 NO ## 15 Anderson-Darling discipl 4.8654 <0.001 NO ## 16 Anderson-Darling harsh 6.7987 <0.001 NO ## 17 Anderson-Darling persevr 6.2778 <0.001 NO ## 18 Anderson-Darling friendl 8.4708 <0.001 NO ## 19 Anderson-Darling worryin 5.1901 <0.001 NO ## 20 Anderson-Darling respnsi 9.0694 <0.001 NO ## 21 Anderson-Darling contrar 4.7126 <0.001 NO ## 22 Anderson-Darling sociabl 6.3069 <0.001 NO ## 23 Anderson-Darling lazy 3.3986 <0.001 NO ## 24 Anderson-Darling coopera 7.6556 <0.001 NO ## 25 Anderson-Darling quiet 3.4238 <0.001 NO ## 26 Anderson-Darling organiz 3.9451 <0.001 NO ## 27 Anderson-Darling criticl 4.3845 <0.001 NO ## 28 Anderson-Darling lax 4.7180 <0.001 NO ## 29 Anderson-Darling laidbck 3.5410 <0.001 NO ## 30 Anderson-Darling withdrw 6.2122 <0.001 NO ## 31 Anderson-Darling givinup 10.0194 <0.001 NO ## 32 Anderson-Darling easygon 4.8535 <0.001 NO ## ## $Descriptives ## n Mean Std.Dev Median Min Max 25th 75th Skew Kurtosis ## distant 240 3.866667 1.794615 3 1 8 2.00 5 0.21660329 -1.115187889 ## talkatv 240 5.883333 1.677732 6 2 9 5.00 7 -0.18189899 -0.748747155 ## carelss 240 3.412500 1.811357 3 1 9 2.00 5 0.66867120 -0.309281507 ## hardwrk 
240 6.925000 1.370108 7 2 9 6.00 8 -0.80831996 0.614831474 ## anxious 240 5.129167 1.880305 5 1 9 4.00 7 -0.09210485 -0.771926581 ## agreebl 240 6.629167 1.372162 7 1 9 6.00 8 -0.78725929 1.042140998 ## tense 240 4.616667 1.904337 5 1 9 3.00 6 -0.02518179 -0.925252081 ## kind 240 6.970833 1.262255 7 2 9 6.00 8 -0.70373663 0.767591124 ## opposng 240 3.858333 1.599141 4 1 8 3.00 5 0.46913404 -0.293884606 ## relaxed 240 5.475000 1.694009 5 1 9 4.00 7 -0.08833270 -0.507015321 ## disorgn 240 4.083333 2.126082 4 1 9 2.00 6 0.16985907 -1.065496081 ## outgoin 240 6.020833 1.809894 6 2 9 5.00 7 -0.34291775 -0.761161418 ## approvn 240 5.858333 1.367867 6 2 9 5.00 7 -0.13555584 -0.154427814 ## shy 240 4.558333 1.969626 5 1 9 3.00 6 0.06063502 -0.919119555 ## discipl 240 6.308333 1.725011 7 1 9 5.00 7 -0.56730380 0.145322024 ## harsh 240 3.600000 1.683789 3 1 8 2.00 5 0.45079493 -0.736948487 ## persevr 240 6.804167 1.405006 7 2 9 6.00 8 -0.62497986 0.642059811 ## friendl 240 7.250000 1.155304 7 2 9 7.00 8 -0.59175740 1.210961241 ## worryin 240 5.212500 2.108126 6 1 9 3.00 7 -0.07134918 -1.139934280 ## respnsi 240 7.291667 1.395725 8 1 9 7.00 8 -1.21576315 2.252882721 ## contrar 240 3.770833 1.500900 4 1 8 3.00 5 0.22186635 -0.472970716 ## sociabl 240 6.445833 1.567579 7 2 9 5.00 8 -0.64011468 0.061296675 ## lazy 240 4.179167 1.893941 4 1 9 3.00 5 0.20658153 -0.597716553 ## coopera 240 6.695833 1.197619 7 3 9 6.00 7 -0.37705887 0.004767416 ## quiet 240 4.604167 1.880750 5 1 9 3.00 6 0.15018658 -0.728162291 ## organiz 240 6.154167 1.963363 6 1 9 5.00 8 -0.45913660 -0.438841538 ## criticl 240 5.170833 1.745282 5 1 9 4.00 6 -0.21890441 -0.556012334 ## lax 240 4.083333 1.664713 4 1 9 3.00 5 0.41571022 -0.088209525 ## laidbck 240 5.245833 1.790837 5 1 9 4.00 7 -0.13048078 -0.522778720 ## withdrw 240 3.754167 1.769684 3 1 7 2.00 5 0.17034350 -1.142037389 ## givinup 240 2.675000 1.553307 2 1 8 1.75 4 1.00112342 0.564770534 ## easygon 240 6.066667 1.601429 6 2 9 5.00 7 -0.41865452 
-0.324220228 ``` --- ## Plot <img src="Lecture7_xaringan_files/figure-html/unnamed-chunk-7-1.png" width="400px" style="display: block; margin: auto;" /> --- ## Linearity You can do all pairwise scatterplots, but with responses restricted to 1-9 the points overlap heavily, so these plots are of limited use. We will just assume that linearity is reasonable. ``` r require(ggplot2) require(GGally) ggpairs(f_data[,1:4]) # example: first four items only ``` <img src="Lecture7_xaringan_files/figure-html/unnamed-chunk-8-1.png" width="300px" style="display: block; margin: auto;" /> --- ## Factorability. Here we want Bartlett's test to be significant! Why? ``` r bartlett.test(f_data) ``` ``` ## ## Bartlett test of homogeneity of variances ## ## data: f_data ## Bartlett's K-squared = 350.08, df = 31, p-value < 2.2e-16 ``` Caution: as its output header shows, base R's `bartlett.test()` tests *homogeneity of variances* across the 32 columns, not sphericity. Bartlett's test of sphericity asks whether the correlation matrix differs from an identity matrix; it is available as `psych::cortest.bartlett(cor(f_data), n = 240)` and has p(p - 1)/2 = 496 degrees of freedom for 32 items. --- ## Sample write-up Bartlett's test of sphericity was significant, suggesting that factor analysis is appropriate (`\(\chi^2\)`(496) = [value from `cortest.bartlett()`], _p_ < .001). Do not report the `\(\chi^2\)`(31) = 350.1 above here: it comes from the homogeneity-of-variance test. --- ## KMO-test Kaiser-Meyer-Olkin factor adequacy ranges from 0 to 1. All items should be > .5 [(Kaiser, 1974)](http://jaltcue.org/files/articles/Kaiser1974_an_index_of_factorial_simplicity.pdf) ``` r require(psych) KMO(f_data) ``` ``` ## Kaiser-Meyer-Olkin factor adequacy ## Call: KMO(r = f_data) ## Overall MSA = 0.84 ## MSA for each item = ## distant talkatv carelss hardwrk anxious agreebl tense kind opposng relaxed ## 0.88 0.86 0.82 0.87 0.82 0.73 0.84 0.81 0.79 0.86 ## disorgn outgoin approvn shy discipl harsh persevr friendl worryin respnsi ## 0.75 0.87 0.89 0.87 0.84 0.85 0.86 0.87 0.81 0.86 ## contrar sociabl lazy coopera quiet organiz criticl lax laidbck withdrw ## 0.83 0.90 0.89 0.83 0.87 0.78 0.87 0.81 0.73 0.90 ## givinup easygon ## 0.89 0.78 ``` --- ## Interpretations 0.00 to 0.49 unacceptable. 0.50 to 0.59 miserable. 0.60 to 0.69 mediocre. 0.70 to 0.79 middling. 0.80 to 0.89 meritorious. 0.90 to 1.00 marvelous. --- ## Sample write-up All 32 items showed middling to marvelous adequacy for factor analysis (overall MSA = .84; all item MSA `\(\geq\)` .73). --- ## Try it yourself.
Download the data from [here](https://stats.idre.ucla.edu/wp-content/uploads/2016/02/M255.sav). The data are ratings of instructors from a study by Sidanius. Read in the data, make a subset with items 13:24 (Hint: use the select function from dplyr with num_range) and conduct a KMO test. --- ## ggcorrplot ``` r # ggcorrplot returns a ggplot object, so you can tweak it further. require(ggcorrplot) # Take absolute correlations: here we are interested in strength, not direction. cormatrix<-abs(cor(f_data[,1:20])) corplot<-ggcorrplot(cormatrix, hc.order = TRUE, type = "lower", method = "circle") ``` --- ## Plot <img src="Lecture7_xaringan_files/figure-html/unnamed-chunk-12-1.png" style="display: block; margin: auto;" /> --- ## Some further terms. See [here](https://quantdev.ssri.psu.edu/tutorials/intro-basic-exploratory-factor-analysis) **Factor**: An underlying or latent construct causing the observed variables, to a greater or lesser extent. A factor is estimated by a linear combination of our observed variables. When the ‘best fitting’ factors are found, it should be remembered that these factors are not unique! It can be shown that _any_ rotation of the best-fitting factors is also best-fitting. ‘Interpretability’ is used to select the ‘best’ rotation among the equally ‘good’ rotations: to be useful, factors should be interpretable, and rotation is used to improve their interpretability. So once we have extracted the factors, we will rotate them. **Factor loadings**: The degree to which the variable is driven or ‘caused’ by the factor. --- ## More terms. **Factor scores/weights**: A score can be estimated for each participant on each factor and added to your data frame. Occasionally, one would then use those scores in further analyses. **Communality of a variable**: The extent to which the variability across participants in a variable is ‘explained’ by the set of factors extracted in the factor analysis. For standardized variables, Uniqueness = 1 - Communality.
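A minimal sketch of the two ideas above (communality/uniqueness, and the non-uniqueness of factors under rotation). It uses base R's `stats::factanal()` (maximum likelihood) on simulated data, not `psych::fa()` on the lecture data; the item names `a1` to `b3` and the loadings used to simulate them are hypothetical. The final two checks should show that a varimax rotation changes the loadings but leaves each item's communality and the model-implied correlations unchanged, which is why the rotation is chosen for interpretability rather than fit.

```r
# Minimal sketch on simulated data (not the lecture data): two hypothetical
# latent factors, three items each.
set.seed(1)
n <- 500
f1 <- rnorm(n)
f2 <- rnorm(n)
items <- data.frame(
  a1 = 0.8 * f1 + rnorm(n, sd = 0.6),
  a2 = 0.7 * f1 + rnorm(n, sd = 0.7),
  a3 = 0.6 * f1 + rnorm(n, sd = 0.8),
  b1 = 0.8 * f2 + rnorm(n, sd = 0.6),
  b2 = 0.7 * f2 + rnorm(n, sd = 0.7),
  b3 = 0.6 * f2 + rnorm(n, sd = 0.8)
)

# Unrotated maximum-likelihood solution, then a varimax rotation of its loadings.
fit <- factanal(items, factors = 2, rotation = "none")
L_unrot <- unclass(fit$loadings)
L_rot <- unclass(varimax(L_unrot)$loadings)

# Communality = sum of squared loadings across factors; uniqueness = 1 - communality.
communality <- rowSums(L_unrot^2)
uniqueness <- 1 - communality
round(cbind(communality, uniqueness, factanal_uniqueness = fit$uniquenesses), 3)

# Rotation changes the loadings but not the communalities or the
# model-implied correlations L %*% t(L); both lines should print TRUE.
all.equal(rowSums(L_rot^2), communality)
all.equal(L_rot %*% t(L_rot), L_unrot %*% t(L_unrot))
```

The `uniqueness` column should closely match `factanal()`'s own `uniquenesses`, since at the maximum-likelihood solution communality plus uniqueness equals 1 for each standardized item.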
--- ## Basic factor analysis Varimax rotation. Let's start by extracting 8 factors. (Request a large number and then trim down!) ``` r require(psych) # Naming the result fa_8 avoids giving it the same name as psych's fa() function. fa_8 <- fa(f_data, nfactors = 8, fm = 'minres', rotate = 'varimax') ``` --- ## Output ``` r sink('fa_output.txt') print(fa_8) sink() ``` --- ## Number of factors. We need to determine the number of factors, and there are many options! From Revelle (2017:37): 1) Extracting factors until the `\(\chi^2\)` of the residual matrix is not significant. 2) Extracting factors until the change in `\(\chi^2\)` from factor n to factor n+1 is not significant. 3) Extracting factors until the eigen values of the real data are less than the corresponding eigen values of a random data set of the same size (parallel analysis) fa.parallel (Horn, 1965). 4) Plotting the magnitude of the successive eigen values and applying the scree test (a sudden drop in eigen values analogous to the change in slope seen when scrambling up the talus slope of a mountain and approaching the rock face) (Cattell, 1966). --- ## Continued... 5) Extracting factors as long as they are interpretable. 6) Using the Very Simple Structure Criterion (vss) (Revelle and Rocklin, 1979). 7) Using Wayne Velicer’s Minimum Average Partial (MAP) criterion (Velicer, 1976). 8) Extracting principal components until the eigen value < 1 (Kaiser criterion). --- ## Which one? Each has advantages and disadvantages. Option 8), although common, is probably the worst. Read more [here](http://personality-project.org/r/psych/HowTo/factor.pdf) <img src="https://media.giphy.com/media/3o6gDSdED1B5wjC2Gc/giphy.gif" width="400px" style="display: block; margin: auto;" /> --- ## Parallel analysis. Also part of the output already. Here I used 'minres' as the extraction method.
Parallel analysis suggests 5 factors (compare red line to blue triangle) ``` r require(psych) parallel <- fa.parallel(f_data, fm = 'minres', fa = 'fa') ``` <img src="Lecture7_xaringan_files/figure-html/unnamed-chunk-16-1.png" style="display: block; margin: auto;" /> ``` ## Parallel analysis suggests that the number of factors = 5 and the number of components = NA ``` --- ## Parallel output. ``` r parallel ``` ``` ## Call: fa.parallel(x = f_data, fm = "minres", fa = "fa") ## Parallel analysis suggests that the number of factors = 5 and the number of components = NA ## ## Eigen Values of ## ## eigen values of factors ## [1] 6.52 3.66 2.35 1.53 1.02 0.38 0.14 0.02 0.01 -0.02 -0.09 -0.16 ## [13] -0.18 -0.21 -0.25 -0.28 -0.33 -0.34 -0.37 -0.39 -0.40 -0.43 -0.45 -0.48 ## [25] -0.49 -0.52 -0.55 -0.58 -0.60 -0.62 -0.67 -0.70 ## ## eigen values of simulated factors ## [1] 0.86 0.68 0.60 0.52 0.46 0.41 0.36 0.31 0.26 0.23 0.20 0.16 ## [13] 0.12 0.08 0.04 0.00 -0.04 -0.07 -0.10 -0.14 -0.17 -0.20 -0.23 -0.25 ## [25] -0.28 -0.32 -0.36 -0.39 -0.42 -0.45 -0.49 -0.53 ## ## eigen values of components ## [1] 7.24 4.53 3.12 2.33 1.88 1.19 0.93 0.86 0.80 0.71 0.69 0.64 0.63 0.54 0.51 ## [16] 0.47 0.46 0.45 0.44 0.41 0.39 0.37 0.33 0.30 0.29 0.28 0.25 0.23 0.22 0.20 ## [31] 0.17 0.13 ## ## eigen values of simulated components ## [1] NA ``` --- ## Kaiser criterion. The Kaiser criterion retains as many factors as there are eigenvalues > 1. Five of the factor eigenvalues (on the graph, and listed below) exceed 1, which would suggest 5 factors. Note that Kaiser's rule was defined for principal-component eigenvalues (option 8 above); six of the component eigenvalues in the parallel output exceed 1.
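A minimal base-R sketch of the Kaiser rule and of Horn-style parallel analysis, assuming a numeric data frame `x` (simulated here with two hypothetical six-item factors; you could substitute `f_data`). It works on principal-component eigenvalues of the correlation matrix, whereas `fa.parallel(..., fa = 'fa')` compares eigenvalues from a common-factor solution, so the two approaches can give different counts. Using 100 random data sets is an arbitrary choice.

```r
# Minimal sketch: Kaiser rule and Horn-style parallel analysis on component eigenvalues.
# Simulated stand-in data: two hypothetical factors, six items each.
set.seed(2)
n <- 240
p <- 12
f1 <- rnorm(n)
f2 <- rnorm(n)
x <- as.data.frame(cbind(
  sapply(1:6, function(i) 0.7 * f1 + rnorm(n, sd = 0.7)),
  sapply(1:6, function(i) 0.7 * f2 + rnorm(n, sd = 0.7))
))

# Eigenvalues of the observed correlation matrix (largest first).
obs_eig <- eigen(cor(x), symmetric = TRUE, only.values = TRUE)$values

# Kaiser criterion: count eigenvalues greater than 1.
n_kaiser <- sum(obs_eig > 1)

# Parallel analysis: average eigenvalues of correlation matrices computed from
# pure-noise data with the same n and p (100 replications, an arbitrary choice).
n_iter <- 100
sim_eig <- replicate(n_iter, eigen(cor(matrix(rnorm(n * p), n, p)),
                                   symmetric = TRUE, only.values = TRUE)$values)
mean_sim <- rowMeans(sim_eig)

# Retain components up to the first observed eigenvalue that does not exceed
# its random counterpart.
n_parallel <- which(obs_eig <= mean_sim)[1] - 1

c(kaiser = n_kaiser, parallel = n_parallel)
```

With clean simulated structure like this, both rules recover the two factors. With the lecture data they disagree (five factors from `fa.parallel()`, six component eigenvalues above 1), which is one reason to compare several criteria.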
``` r parallel$fa.values ``` ``` ## [1] 6.518632220 3.663879765 2.352072662 1.529192200 1.019134280 ## [6] 0.375145536 0.143123374 0.017903052 0.008116657 -0.018428192 ## [11] -0.092557391 -0.157077647 -0.179716109 -0.214661107 -0.246564997 ## [16] -0.279092818 -0.331472781 -0.341440377 -0.366177997 -0.388743904 ## [21] -0.397539896 -0.425290978 -0.449044173 -0.483146864 -0.488668863 ## [26] -0.516074439 -0.548458000 -0.584031532 -0.602210864 -0.619056368 ## [31] -0.674287746 -0.704824040 ``` --- ## Scree plot Depending on where you place the _elbow_ of the graph (scree criterion), you would extract 4 or 5 factors. ``` ## Parallel analysis suggests that the number of factors = 5 and the number of components = NA ``` --- ## VSS / MAP test The VSS plot suggests 3 factors, with very little improvement with 4 (VSS complexity 1); VSS complexity 2 peaks at 5 factors. MAP suggests 6 factors. The full output printed to the console is available [here](https://tvpollet.github.io/PY_0782/VSS_output.txt). ``` r require(psych) VSS(f_data, rotate = "varimax", n.obs = 240) # shows plot ``` <img src="Lecture7_xaringan_files/figure-html/unnamed-chunk-20-1.png" style="display: block; margin: auto;" /> ``` ## ## Very Simple Structure ## Call: vss(x = x, n = n, rotate = rotate, diagonal = diagonal, fm = fm, ## n.obs = n.obs, plot = plot, title = title, use = use, cor = cor) ## VSS complexity 1 achieves a maximimum of 0.62 with 3 factors ## VSS complexity 2 achieves a maximimum of 0.81 with 5 factors ## ## The Velicer MAP achieves a minimum of 0.02 with 6 factors ## BIC achieves a minimum of -1194.35 with 6 factors ## Sample Size adjusted BIC achieves a minimum of -210.21 with 8 factors ## ## Statistics by number of factors ## vss1 vss2 map dof chisq prob sqresid fit RMSEA BIC SABIC complex ## 1 0.52 0.00 0.045 464 2764 1.2e-322 47.7 0.52 0.144 221 1692 1.0 ## 2 0.60 0.72 0.033 433 1992 5.7e-198 27.5 0.72 0.122 -381 992 1.3 ## 3 0.61 0.78 0.024 403 1395 2.3e-109 17.9 0.82 0.101 -814 463 1.4 ## 4 0.61 0.80 0.018 374 967 3.9e-54 12.5 0.87 0.081 -1082 103 1.6 ## 5 0.62 0.81 0.015 346
711 2.2e-27 9.1 0.91 0.066 -1186 -89 1.5 ## 6 0.56 0.79 0.015 319 554 6.8e-15 7.7 0.92 0.055 -1194 -183 1.7 ## 7 0.54 0.77 0.016 293 467 3.9e-10 7.0 0.93 0.050 -1139 -210 1.8 ## 8 0.56 0.77 0.018 268 409 5.9e-08 6.3 0.94 0.047 -1060 -210 1.8 ## eChisq SRMR eCRMS eBIC ## 1 6450 0.165 0.170 3907 ## 2 3021 0.113 0.121 648 ## 3 1517 0.080 0.089 -691 ## 4 767 0.057 0.065 -1283 ## 5 355 0.039 0.046 -1541 ## 6 230 0.031 0.039 -1519 ## 7 176 0.027 0.035 -1430 ## 8 140 0.024 0.033 -1329 ``` --- ## Try it yourself. Conduct a factor analysis extracting a large number of factors (6) on the Sidanius data and store the result. Discuss the output with your neighbour. Run a parallel analysis. Discuss the outcome with your neighbour. --- ## Back to the self-description data: Three- and five-factor solution Let's extract a three-factor solution, although a five-factor solution could also be workable, so we fit both. ``` r fa_3 <- fa(f_data, 3, fm = 'minres', rotate = 'varimax') sink('fa_3_output.txt') print(fa_3) sink() fa_5 <- fa(f_data, 5, fm = 'minres', rotate = 'varimax') sink('fa_5_output.txt') print(fa_5) sink() ``` --- ## Diagram. ``` r fa.diagram(fa_5, marg=c(.01,.01,1,.01)) ``` <img src="Lecture7_xaringan_files/figure-html/unnamed-chunk-22-1.png" style="display: block; margin: auto;" /> --- ## Factors. How would you label those 5 factors? ``` r require(semPlot) semplot1<-semPlotModel(fa_5$loadings) semPaths(semplot1, what="std", layout="circle", nCharNodes = 6) ``` <img src="Lecture7_xaringan_files/figure-html/unnamed-chunk-23-1.png" style="display: block; margin: auto;" /> --- ## Try it yourself. Make a plot for your item scores. <img src="https://media.giphy.com/media/hTTnsRaU1geWuvUUjB/giphy.gif" width="400px" style="display: block; margin: auto;" /> --- ## Fit indices. RMSEA and Tucker-Lewis Index (TLI). A widely used cut-off for RMSEA is .06 [(Hu & Bentler, 1999)](http://www.tandfonline.com/doi/abs/10.1080/10705519909540118), with lower values indicating better fit; others regard values up to .08 as acceptable.
But beware of [cut-offs](https://www.ncbi.nlm.nih.gov/pmc/articles/PMC2743032/). RMSEA depends more on sample size than TLI does. For the Tucker-Lewis Index, values >.90 or >.95 are considered a good fit. Again, beware of cut-offs. Other indices also exist, and we will discuss those when we move to SEM. --- ## Back to the models. The five-factor model does better than the three-factor model. But bear in mind that this analysis is exploratory rather than confirmatory. **Sample write-up**: While the five-factor model could be considered an acceptable fit in terms of RMSEA (.071, below .08), it was not in terms of TLI (.849, below .90). --- ## Extraction methods: choice paralysis. There are many methods, but most of the time you'll get [similar results](https://stats.stackexchange.com/questions/50745/best-factor-extraction-methods-in-factor-analysis/50758). Options for `fm` in `fa()` include minres (`'minres'`), principal axis (`'pa'`), weighted least squares (`'wls'`), generalized least squares (`'gls'`) and maximum likelihood (`'ml'`) factor analysis. Minres and principal axis factoring are commonly used. --- ## Principal Axis Factoring ``` r require(psych) parallel <- fa.parallel(f_data, fm = 'pa', fa = 'fa') ``` <img src="Lecture7_xaringan_files/figure-html/unnamed-chunk-25-1.png" style="display: block; margin: auto;" /> ``` ## Parallel analysis suggests that the number of factors = 5 and the number of components = NA ``` --- ## Extract loadings. You can further beautify this by generating labels for those 32 items.
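One way to keep the item names as row labels is to `unclass()` the loadings: both `stats::factanal()` and `psych::fa()` return loadings of class `loadings`, which is a matrix underneath. The sketch below assumes a hypothetical two-factor fit on simulated items with made-up names; with the lecture data you would start from `fa_5$loadings` instead of `fit$loadings`. Hiding loadings below |.30| is a common display convention, not a rule.

```r
# Sketch: a labelled loadings table (simulated stand-in data; item names are hypothetical).
set.seed(3)
n <- 300
f1 <- rnorm(n)
f2 <- rnorm(n)
items <- data.frame(
  talkative   = 0.8 * f1 + rnorm(n, sd = 0.6),
  outgoing    = 0.7 * f1 + rnorm(n, sd = 0.7),
  sociable    = 0.7 * f1 + rnorm(n, sd = 0.7),
  organised   = 0.8 * f2 + rnorm(n, sd = 0.6),
  disciplined = 0.7 * f2 + rnorm(n, sd = 0.7),
  responsible = 0.7 * f2 + rnorm(n, sd = 0.7)
)
fit <- factanal(items, factors = 2, rotation = "varimax")

# unclass() drops the "loadings" class but keeps the item names as row names.
loadings_mat <- unclass(fit$loadings)
colnames(loadings_mat) <- paste("Factor", seq_len(ncol(loadings_mat)))

# Character version for display: two decimals, blanks where |loading| < .30.
display <- format(round(loadings_mat, 2), nsmall = 2)
display[abs(loadings_mat) < 0.30] <- ""
print(display, quote = FALSE, right = TRUE)

# Built-in alternative: print.loadings() can suppress small loadings and sort items.
print(fit$loadings, cutoff = 0.30, sort = TRUE)
```

The labelled `display` matrix (or `as.data.frame(display)`) could then be passed on for export in the same way as the table below.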
``` r require(stargazer) require(plyr) factor_loadings<-as.data.frame(as.matrix.data.frame(fa_5$loadings)) factor_loadings<-plyr::rename(factor_loadings, c("V1"="Factor 1","V2"="Factor 2", "V3"="Factor 3", "V4"="Factor 4", "V5"="Factor 5")) stargazer(factor_loadings, summary = FALSE,out= "results_loadings.html", header=FALSE, type="html") ``` <table style="text-align:center"><tr><td colspan="6" style="border-bottom: 1px solid black"></td></tr><tr><td style="text-align:left"></td><td>Factor 1</td><td>Factor 2</td><td>Factor 3</td><td>Factor 4</td><td>Factor 5</td></tr> <tr><td colspan="6" style="border-bottom: 1px solid black"></td></tr><tr><td style="text-align:left">1</td><td>0.587</td><td>0.083</td><td>0.075</td><td>-0.110</td><td>0.301</td></tr> <tr><td style="text-align:left">2</td><td>-0.763</td><td>0.029</td><td>-0.023</td><td>0.124</td><td>0.163</td></tr> <tr><td style="text-align:left">3</td><td>0.022</td><td>0.603</td><td>0.086</td><td>-0.027</td><td>0.275</td></tr> <tr><td style="text-align:left">4</td><td>-0.196</td><td>-0.645</td><td>0.139</td><td>0.113</td><td>0.101</td></tr> <tr><td style="text-align:left">5</td><td>0.169</td><td>0.080</td><td>0.698</td><td>0.156</td><td>0.217</td></tr> <tr><td style="text-align:left">6</td><td>-0.025</td><td>0.021</td><td>-0.057</td><td>0.640</td><td>-0.169</td></tr> <tr><td style="text-align:left">7</td><td>0.161</td><td>0.008</td><td>0.775</td><td>0.007</td><td>0.264</td></tr> <tr><td style="text-align:left">8</td><td>-0.103</td><td>-0.313</td><td>0.032</td><td>0.609</td><td>-0.184</td></tr> <tr><td style="text-align:left">9</td><td>-0.011</td><td>0.107</td><td>0.091</td><td>-0.133</td><td>0.630</td></tr> <tr><td style="text-align:left">10</td><td>-0.020</td><td>0.134</td><td>-0.692</td><td>0.340</td><td>-0.070</td></tr> <tr><td style="text-align:left">11</td><td>-0.014</td><td>0.723</td><td>-0.001</td><td>0.041</td><td>0.153</td></tr> <tr><td 
style="text-align:left">12</td><td>-0.825</td><td>-0.084</td><td>-0.055</td><td>0.218</td><td>0.001</td></tr> <tr><td style="text-align:left">13</td><td>-0.268</td><td>-0.128</td><td>-0.119</td><td>0.505</td><td>-0.119</td></tr> <tr><td style="text-align:left">14</td><td>0.715</td><td>0.195</td><td>0.161</td><td>-0.003</td><td>-0.091</td></tr> <tr><td style="text-align:left">15</td><td>0.033</td><td>-0.630</td><td>0.045</td><td>0.090</td><td>0.090</td></tr> <tr><td style="text-align:left">16</td><td>0.067</td><td>0.127</td><td>0.065</td><td>-0.221</td><td>0.640</td></tr> <tr><td style="text-align:left">17</td><td>-0.159</td><td>-0.542</td><td>0.112</td><td>0.193</td><td>0.091</td></tr> <tr><td style="text-align:left">18</td><td>-0.500</td><td>-0.166</td><td>0.061</td><td>0.546</td><td>-0.158</td></tr> <tr><td style="text-align:left">19</td><td>0.177</td><td>0.051</td><td>0.731</td><td>0.046</td><td>0.131</td></tr> <tr><td style="text-align:left">20</td><td>-0.007</td><td>-0.729</td><td>0.059</td><td>0.234</td><td>0.018</td></tr> <tr><td style="text-align:left">21</td><td>0.052</td><td>0.144</td><td>0.146</td><td>-0.148</td><td>0.719</td></tr> <tr><td style="text-align:left">22</td><td>-0.715</td><td>0.009</td><td>-0.087</td><td>0.259</td><td>-0.098</td></tr> <tr><td style="text-align:left">23</td><td>0.187</td><td>0.676</td><td>0.072</td><td>0.026</td><td>0.126</td></tr> <tr><td style="text-align:left">24</td><td>-0.102</td><td>-0.180</td><td>-0.105</td><td>0.569</td><td>-0.280</td></tr> <tr><td style="text-align:left">25</td><td>0.799</td><td>0.098</td><td>0.171</td><td>0.159</td><td>0.004</td></tr> <tr><td style="text-align:left">26</td><td>-0.060</td><td>-0.757</td><td>-0.016</td><td>0.058</td><td>-0.057</td></tr> <tr><td style="text-align:left">27</td><td>0.087</td><td>-0.169</td><td>0.144</td><td>-0.111</td><td>0.575</td></tr> <tr><td style="text-align:left">28</td><td>0.036</td><td>0.470</td><td>-0.218</td><td>0.230</td><td>0.101</td></tr> <tr><td 
style="text-align:left">29</td><td>-0.027</td><td>0.250</td><td>-0.590</td><td>0.283</td><td>0.088</td></tr> <tr><td style="text-align:left">30</td><td>0.734</td><td>0.144</td><td>0.128</td><td>-0.092</td><td>0.272</td></tr> <tr><td style="text-align:left">31</td><td>0.362</td><td>0.459</td><td>0.217</td><td>-0.103</td><td>0.132</td></tr> <tr><td style="text-align:left">32</td><td>-0.130</td><td>0.132</td><td>-0.444</td><td>0.430</td><td>-0.022</td></tr> <tr><td colspan="6" style="border-bottom: 1px solid black"></td></tr></table> --- ## Plot loadings. ``` r require(GPArotation) plot(fa_5,labels=names(f_data),cex=.7, ylim=c(-.1,1)) ``` --- ## Too busy and not very useful. Just plot the first two factors, and those with loadings above .5. Label it. ``` r factor.plot(fa_5, choose=c(1,2), cut=0.5, labels=colnames(f_data)) ``` --- ## ggplot2. Alternative graphs, see [here](https://rpubs.com/danmirman/plotting_factor_analysis) --- ## Extensions. [Multiple factor analysis](http://www.sthda.com/english/articles/31-principal-component-methods-in-r-practical-guide/116-mfa-multiple-factor-analysis-in-r-essentials) (group-component) --- ## I just want Cronbach's alpha... Find out how to compute it [here](https://personality-project.org/r/html/alpha.html). Perhaps you should [not](https://www.ncbi.nlm.nih.gov/pmc/articles/PMC2792363/) rely on it too much? <img src="https://media.giphy.com/media/1zSz5MVw4zKg0/giphy.gif" width="300px" style="display: block; margin: auto;" /> --- ## Exercise Load the BFI data from the 'psych' package (see `?bfi`). This contains data on 2800 participants completing items relating to the 'big five' from the IPIP pool. You'll have to subset the variables for your factor analysis. Conduct Bartlett's test of sphericity and a KMO test. Conduct an exploratory factor analysis (using 'minres' as the method): use parallel analysis, and discuss the scree plot, the Very Simple Structure criterion and Velicer's MAP test.
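For the Bartlett and KMO steps, a base-R sketch of what these statistics compute may help. The hand-written `bartlett_sphericity()` and `kmo()` functions below are illustrations, not the psych implementations, and simulated data with two hypothetical four-item factors stand in for the bfi items. Bartlett's test of sphericity uses `\(\chi^2 = -\left(n - 1 - \frac{2p + 5}{6}\right)\ln\lvert R \rvert\)` on `\(p(p-1)/2\)` degrees of freedom; KMO compares squared correlations with squared partial correlations obtained from `\(R^{-1}\)`. Neither is what base R's `bartlett.test()` computes (that tests homogeneity of variances).

```r
# Sketch: Bartlett's test of sphericity and the KMO measure from a correlation matrix.
# Simulated stand-in data: two hypothetical factors with four items each.
set.seed(4)
n <- 240
f1 <- rnorm(n)
f2 <- rnorm(n)
x <- as.data.frame(cbind(
  sapply(1:4, function(i) 0.7 * f1 + rnorm(n, sd = 0.7)),
  sapply(1:4, function(i) 0.7 * f2 + rnorm(n, sd = 0.7))
))

bartlett_sphericity <- function(R, n) {
  p <- ncol(R)
  statistic <- -(n - 1 - (2 * p + 5) / 6) * log(det(R))
  df <- p * (p - 1) / 2
  c(chisq = statistic, df = df,
    p.value = pchisq(statistic, df, lower.tail = FALSE))
}

kmo <- function(R) {
  A <- solve(R)
  # Partial correlations up to sign (the sign is squared away below).
  Q <- A / sqrt(outer(diag(A), diag(A)))
  diag(R) <- 0
  diag(Q) <- 0
  r2 <- R^2
  q2 <- Q^2
  list(overall = sum(r2) / (sum(r2) + sum(q2)),
       item = colSums(r2) / (colSums(r2) + colSums(q2)))
}

R <- cor(x)
bt <- bartlett_sphericity(R, n = nrow(x))
km <- kmo(R)
round(bt, 4)
round(km$overall, 2)
round(km$item, 2)
```

With real data, `psych::cortest.bartlett(cor(x), n = nrow(x))` and `psych::KMO(x)` should give matching values.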
--- ## Exercise (cont'd) Extract a five-factor model (use varimax rotation) and export the factor loadings of these five factors. Discuss the RMSEA and TLI for that five-factor model. Make a plot of the factors. --- ## References (and further reading) Also check the reading list, which contains many more than are listed here. * Kline, P. (1993). _An Easy Guide to Factor Analysis_. London: Routledge. * Tabachnick, B. G., & Fidell, L. S. (2007). _Using Multivariate Statistics_. Boston, MA: Pearson Education.